OAT workshop 2022, New Zealand

Virtual reality researchers and developers need tools to build state-of-the-art technologies that advance knowledge. Promoting open-source tools, which can be modified or redistributed, greatly helps with this. Open-source tools are one means of propagating best practice: they lower the engineering barrier to entry for researchers while embodying the prior work on which they were built. Open access tools are also critical to eliminate redundancies and increase worldwide research collaboration in VR. As academic research grows, it also needs to move as fast as industry, and collaboration and shared tools are the best way to achieve that. In this scenario, it is more important than ever that academic research builds upon best practices and results to amplify its impact in the broader field.

Driven by uptake in consumer and professional markets, virtual reality technologies are now being developed at a significant pace. We will gather creators and users of open-access libraries, ranging from avatars (like the Microsoft Rocketbox avatar library) to animation to networking (the Ubiq toolkit), to explore how open access tools are helping advance the VR community.

We invite all researchers to join the third edition of this workshop and learn from existing libraries. We also invite submissions of technical and systems papers that describe new libraries and/or new features of existing libraries. In this workshop, we will explore these open-access tools, their accessibility, and what types of applications they enable. Finally, we invite users of current libraries to submit their papers and share what they learned while using these tools. All papers and repos will be collected and curated on the workshop website https://openaccess.github.io/

- Open source libraries and tools (from avatars to AI to tracking to networking)

- Research libraries and tools

- New tools, new features, new repos

- Usage of libraries/tools

- Open datasets

Submissions should include a title and a list of authors, and be 2-4 pages long. All paper submissions must be in English. All IEEE VR Conference Paper submissions must be prepared in IEEE Computer Society VGTC format (https://tc.computer.org/vgtc/publications/conference/) and submitted in PDF format. We accept research papers, technical notes, position papers, and work-in-progress papers.

Accepted papers will be featured in the IEEE Xplore library. Additional pages can be considered on a case-by-case basis, but you should check with the workshop organizers before the submission deadline. Acceptable paper types are work-in-progress papers, research papers, position papers, and commentaries. Submissions will be reviewed by the organizers, and authors of accepted submissions will give a 10-minute talk, with a panel discussion at the end of the session. At least one author must register for the workshop. Selected submissions will get the opportunity to be extended into articles to be considered for a special issue.

To submit your work, visit https://new.precisionconference.com/vr

The organizers will review all the submissions.

- Submission deadline: January 12th, 2024

- Notification of acceptance: January 18th, 2024

- Camera-ready deadline: January 24th, 2024

- The workshop will be in person.

1:30 - 1:55 PM

Keynote speaker Dr. Ryan McMahan

Break

Workshop presentations

-

2:00PM - 2:15 PM

Do, Tiffany

The Influence of Mixed-Gender Avatar Facial Features on Racial Perception: Insights from the VALID Avatar Library -

2:15 PM - 2:30 PM

Mitchell, Kenny

DanceMark: An open telemetry framework for latency-sensitive real-time networked immersive experiences -

2:30 PM - 2:45 PM

Sterna, Radoslaw

The Free Viewing VR presentation tool -

2:45 - 3:00 PM

Marfia, Gustavo

Flying in XR: Bridging Desktop applications in eXtended Reality through Deep Learning -

3:00 PM - 3:15 PM

Murray, John

Discovering the Metaverses: Towards Open Federated Immersive Authoring Platforms -

3:15 PM - 3:25 PM

Wang, Cheng Yao

CollabXR: Bridging Realities in Collaborative Workspaces with Dynamic Plugin and Collaborative Tools Integration -

3:25 PM - 3:30 PM

Gonzalez, J Eric

XDTK: A Cross-Device Toolkit for Input & Interaction in XR -

Food break

3:30 - 4:00 PM -

4:00 PM - 4:15 PM

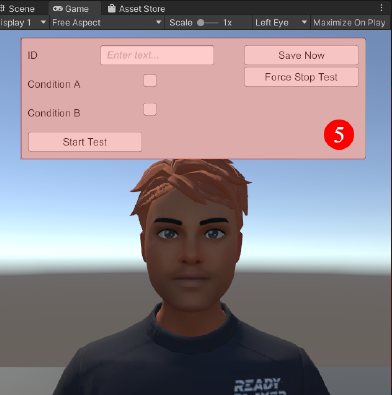

Ehret, Jonathan

StudyFramework: Comfortably Setting up and Conducting Factorial-Design Studies Using the Unreal Engine -

4:15 PM - 4:30 PM

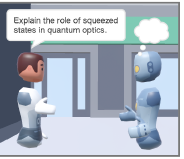

Andrist, Sean

SIGMA: An Open Source Interactive System for Mixed-Reality Task Assistance Research -

4:30 PM - 4:45

Bovo, Riccardo

WindowMirror: an opensource toolkit to bring interactive multi-windowviews into XR -

4:45 PM - 5:00 PM

Workshop end by organizers

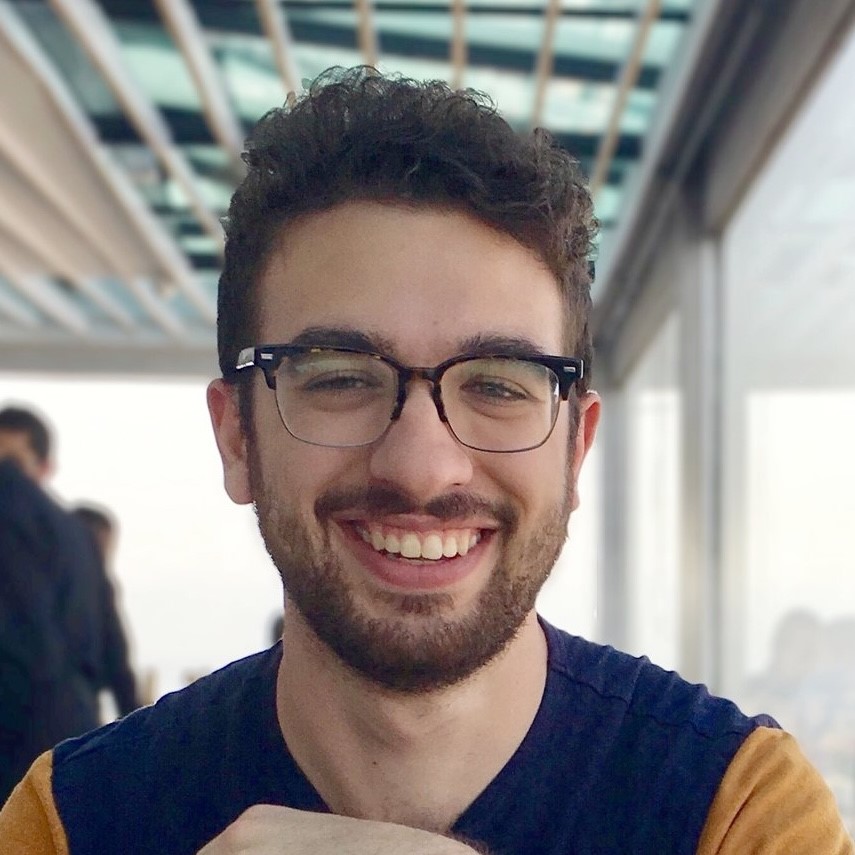

Matias Volonte, Clemson University

mvolont@clemson.edu

Matias Volonte is an Assistant Professor at Clemson University. Currently, Matias is investigating the use of virtual agents in which human-agent relationships improve task outcomes in the healthcare domain. Matias is interested in researching simulated face-to-face conversations with an emphasis on the relational and conversational aspects of these interactions. In his Ph.D. at Clemson University,

Anthony Steed, University College London

a.steed@ucl.ac.uk @anthony_steed

Prof. Anthony Steed is Head of the Virtual Environments and Computer Graphics (VECG) group at University College London. Prof. Steed’s research interests extend from virtual reality systems through to mobile mixed-reality systems, and from system development through to measures of user response to virtual content. He has worked extensively on systems for collaborative mixed reality. He is lead author of a review “Networked Graphics: Building Networked Games and Virtual Environments”. He was the recipient of the IEEE VGTC’s 2016 Virtual Reality Technical Achievement Award.

Roshan Venkatakrishnan, University of Florida

rvenkatakrishnan@ufl.edu

Roshan Venkatakrishnan is a Research Associate in the Department of Computer and Information Science and Engineering at the University of Florida. He uses a human-centered approach leveraging virtual, augmented, and mixed reality environments to understand human perception and action. Roshan holds a Ph.D. in Human-Centered Computing from Clemson University, where he specialized in researching user representations and their effects on the perception of action capabilities in the near field. Broadly speaking, his research interests span topics including self-avatars and embodiment; depth, auditory, size, and affordance perception; and cybersickness in these immersive media.

Bala Kumaravel, Microsoft

bala.kumaravel@microsoft.com

He is a senior researcher on the EPIC team at Microsoft Research, Redmond. He works on leveraging Generative AI models (LLMs and Diffusion models) to enhance user productivity and collaboration in business-critical applications. He is particularly interested in customizing, finetuning, and aligning generative AI models for specific end-user applications. Before joining Microsoft, he completed his Ph.D. at the University of California, Berkeley.

Hasti Seifi, Arizona State University

Hasti.Seifi@asu.edu

Dr. Seifi is an assistant professor in the School of Computing and Augmented Intelligence at Arizona State University. Previously, she was at the University of Copenhagen and the Max Planck Institute for Intelligent Systems. Dr. Seifi aims to democratize access to emerging technologies such as haptics, VR/AR, and robotics. She has helped create open-source datasets, interactive visualizations, and educational content for VR, haptics, and physical HRI, such as LocomotionVault, Haptipedia, VibViz, LearnHaptics, and RobotHand.

Eric Gonzalez, Google

ejgonz@google.com

Eric Gonzalez is a researcher at Google in the Blended Interaction Research & Devices (BIRD) Lab. He broadly works on enabling interactions and experiences that bridge the physical and digital in immersive mixed reality. Prior to Google, he completed his PhD at Stanford University working with Prof. Sean Follmer in the SHAPE Lab, where his research focused on augmenting haptic experiences in VR through tangible displays and perceptual illusions.

Yuhang Zhao, University of Wisconsin-Madison

yuhang.zhao@cs.wisc.edu

Dr. Zhao is an assistant professor in the Department of Computer Sciences at the University of Wisconsin-Madison. She received her Ph.D. degree from Cornell University. Her research interests include Human-Computer Interaction, accessibility, augmented and virtual reality, and mobile interaction. She designs systems and interaction techniques to empower people with diverse abilities in both real-life and virtual worlds.

Mar Gonzalez Franco, Google

margon@google.com

Mar is a prolific computer scientist and 2022 IEEE VR New Researcher awardee. She is deeply involved in creating open-source tools, and has released the Microsoft Rocketbox avatars, the Movebox and Headbox animation toolkits, embodiment questionnaires, mods for the AirSim simulation tool to simulate crowds (CityLifeSim), code and hardware for latency measurement, and, more recently, AI segmentation tools such as Google DiffSeg. All repos are available at https://github.com/margonzalezfranco/

Inceptor: An Open Source Tool for Automated Creation of 3D Social Scenarios

Dan Pollak, School of Computer Science, RUNI

Jonathan Giron, School of Computer Science, RUNI

Doron Friedman, School of Computer Science, RUNI

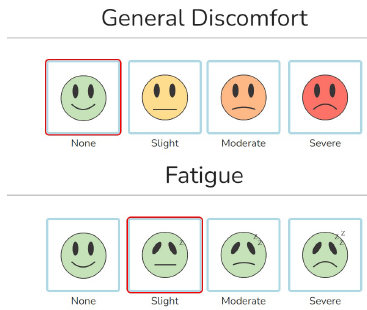

Cybersickness assessment framework (CSAF): An Open Source Repository for Standardized Cybersickness Experiments

Adriano Viegas Milani, Ecole Polytechnique Federale de Lausanne

Nana Tian, Ecole Polytechnique Federale de Lausanne

Visualsickness: A web application to record and organize cybersickness data

Elliot Topper, The College of New Jersey

Paula Arroyave, The College of New Jersey

Sharif Mohammad Shahnewaz Ferdous, The College of New Jersey

Streamlining Physiological Observations in Immersive Virtual Reality Studies with the Virtual Reality Scientific Toolkit

Jonas Deuchler, Hochschule Karlsruhe, University of Hohenheim

Wladimir Hettmann, Hochschule Karlsruhe, University of Hohenheim

Daniel Hepperle, Hochschule Karlsruhe, University of Hohenheim

Matthias Wölfel, Hochschule Karlsruhe, University of Hohenheim

Semi-Automatic Construction of Virtual Reality Environment for Highway Work Zone Training using Open-Source Tools

Can Li, University of Missouri

Zhu Qing, University of Missouri

Praveen Edara, University of Missouri

Carlos Sun, University of Missouri

Bimal Balakrishnan, Mississippi State University

Yi Shang, University of Missouri

A Preliminary Interview: Understanding XR Developers’ Needs towards Open-Source Accessibility Support

Tiger F. Ji, University of Wisconsin-Madison

Yaxin Hu, School of Computer Science, RUNI

Yu Huang, Vanderbilt University

Ruofei Du, Google Labs

Yuhang Zhao, University of Wisconsin-Madison

Ubiq-Genie: Leveraging External Frameworks for Enhanced Social VR Experiences

Nels Numan, University College London

Daniele Giunchi, University College London

Benjamin Congdon, University College London

Anthony Steed, University College London

Virtual-to-Physical Surface Alignment and Refinement Techniques for Handwriting, Sketching, and Selection in XR

Florian Kern, HCI Group, University of Wurzburg

Jonathan Tschanter, HCI Group, University of Wurzburg

Marc Erich Latoschik, HCI Group, University of Wurzburg

Data Capture, Analysis and Understanding

Rag-Rug: An Open Source Toolkit for Situated Visualization and Analytics

Dieter Schmalstieg, Philipp Fleck

(Invited from TVCG paper)

https://github.com/philfleck/ragrug

Developing Mixed Reality Applications with Platform for Situated Intelligence

Sean Andrist, Dan Bohus, Ashley Feniello, Nick Saw

https://github.com/microsoft/psi

STAG: A Tool for realtime Replay and Analysis of Spatial Trajectory and Gaze Information captured in Immersive Environments

Aryabrata Basu

Excite-O-Meter: an Open-Source Unity Plugin to Analyze Heart Activity and Movement Trajectories in Custom VR Environments

Luis Quintero, Panagiotis Papapetrou, John Edison Muñoz Cardona, Jeroen de Mooij, Michael Gaebler

https://github.com/luiseduve/exciteometer/

Perception and Cognition

An Open Platform for Research about Cognitive Load in Virtual Reality

Olivier Augereau, Gabriel Brocheton, Pedro Paulo Do Prado Neto

https://git.enib.fr/g7broche/vr-ppe

Human Vision vs. Computer Vision: A Readability Study in a Virtual Reality Environment

Zhu Qing, Praveen Edara

Asymmetric Normalization in Social Virtual Reality Studies

Jonas Deuchler, Daniel Hepperle, Matthias Wölfel

Authoring and Access

BabiaXR: Virtual Reality software data visualizations for the Web

David Moreno-Lumbreras, Jesus M. Gonzalez-Barahona, Andrea Villaverde

https://babiaxr.gitlab.io/

RealityFlow: Open-Source Multi-User Immersive Authoring

John T Murray

NUI-SpatialMarkers: AR Spatial Markers For the Rest of Us

Alex G Karduna, Adam Sinclair Williams, Francisco Raul Ortega

Avatar Tools

Physics-based character animation for Virtual Reality

Joan Llobera, Caecilia Charbonnier

https://joanllobera.github.io/marathon-envs/

HeadBox: A Facial Blendshape Animation Toolkit for the Microsoft Rocketbox Library

Matias Volonte, Eyal Ofek, Ken Jakubzak, Shawn Bruner, Mar Gonzalez-Franco

https://github.com/openVRlab/Headbox

Integrating Rocketbox Avatars with the Ubiq Social VR platform

Lisa Izzouzi, Anthony Steed

https://github.com/UCL-VR/ubiq