OAT workshop 2023, Shanghai, China

OAT workshop 2022, New Zealand

Virtual reality researchers and developers need tools to build state-of-the-art technologies that help advance knowledge. Promoting open-source tools that can be modified or redistributed greatly helps this effort. Open-source tools are one means of propagating best practice: they lower the engineering barrier to entry for researchers while also embodying the prior work on which they were built. Open-access tools are also critical for eliminating redundancy and increasing worldwide research collaboration in VR. At a time when academic research is growing and needs to move as fast as industry, collaboration and shared tools are the best way to achieve this. It is therefore more important than ever that academic research builds upon best practices and results to amplify its impact in the broader field.

Driven by uptake in consumer and professional markets, virtual reality technologies are now being developed at a significant pace. We will gather creators and users of open-access libraries, ranging from avatars (like the Microsoft Rocketbox avatar library) to animation to networking (the Ubiq toolkit), to explore how open-access tools are helping advance the VR community.

We invite all researchers to join the second edition of this workshop and learn from existing libraries. We also invite submissions of technical and systems papers that describe new libraries and/or new features of existing libraries. In this workshop, we will explore these open-access tools, their accessibility, and the types of applications they enable. Finally, we invite users of current libraries to submit papers and share what they have learned while using these tools. All papers and repos will be collected and curated on the workshop website: https://openaccess.github.io/

- Open source libraries and tools (from avatars to AI to tracking to networking)

- Research libraries and tools

- New tools, new features, new repos

- Usage of libraries/tools

- Open datasets

Submissions should include a title and a list of authors, and be 2-4 pages long. All paper submissions must be in English. All IEEE VR Conference Paper submissions must be prepared in IEEE Computer Society VGTC format (https://tc.computer.org/vgtc/publications/conference/) and submitted in PDF format. We accept research papers, technical notes, position papers, and work-in-progress papers.

The accepted papers will be featured in the IEEE Xplore library. Additional pages can be considered on a case-by-case basis, but you should check with the workshop organizers (m.volonte@northeastern.edu) before the submission deadline. Acceptable paper types are work-in-progress papers, research papers, position papers, and commentaries. Submissions will be reviewed by the organizers, and authors of accepted submissions will give a 10-minute talk, followed by a panel discussion at the end of the session. At least one author must register for the workshop. Selected submissions will have the opportunity to be extended into articles to be considered for a special issue.

To submit your work, visit https://new.precisionconference.com/vr

The organizers will review all the submissions.

- Submission deadline: 25th January

- Notification of acceptance: 28th January

- Camera-ready deadline: 1st February

- Expected workshop date: 25th March 2023

- The workshop will be online: we will use Zoom and chat systems like Slack.

- 14:00-14:15: Introduction by the organizers

- 14:15-14:30: Keynote: Dr. Anthony Steed

- 14:30-16:00: Short presentations of the accepted papers: 10 min per submission + discussion

- 16:00-16:15: Discussion in the breakout rooms

- 16:15-16:30: Closing and call to get involved in open-source efforts

Matias Volonte, Northeastern University

m.volonte@northeastern.edu

Dr. Matias Volonte is currently a Postdoctoral Research Associate at the Department of Computer Science at Northeastern University. He is working with Dr. Tim Bickmore from the Relational Agents Group. Currently, Matias is investigating the use of virtual agents in which human-agent relationships improve task outcomes in the healthcare domain. Matias is interested in researching simulated face-to-face conversations with an emphasis on the relational and conversational aspects of these interactions. In his Ph.D. at Clemson University, Matias studied human and virtual human interaction in immersive and non-immersive environments with Dr. Sabarish Babu.

Mar Gonzalez Franco, Google

margon@google.com

Mar explores human behaviour and perception to build better technologies in the wild. She has a prolific scientific output, and her work has transferred to products used daily around the world, like HoloLens, Microsoft Soundscape, and Together mode in Microsoft Teams. Mar is a Computer Scientist and holds a PhD in Immersive Virtual Reality and Clinical Psychology from Universitat de Barcelona. Before joining Google, she was at Microsoft, Airbus, MIT, and University College London.

Eyal Ofek, Microsoft Research

eyalofek@microsoft.com

Dr. Ofek is a Principal Researcher at Microsoft Research and a senior member of the ACM. His research interests include augmented reality (AR), virtual reality (VR), haptics, and interactive projection. He holds a Ph.D. in Computer Vision from The Hebrew University of Jerusalem and was a founder of a couple of companies in the area of computer graphics, whose work included a successful drawing application and the development of the world’s first time-of-flight video camera, which was a basis for the HoloLens depth camera.

Andrew Duchowski, Clemson University

aduchow@clemson.edu

Dr. Duchowski received his B.Sc. ('90) and Ph.D. ('97) degrees in Computer Science from Simon Fraser University, Burnaby, Canada, and Texas A&M University, College Station, TX, respectively. Dr. Duchowski's research and teaching interests include visual attention and perception, eye movements and eye tracking, computer vision, graphics, and virtual environments.

Anthony Steed, University College London

a.steed@ucl.ac.uk

Dr. Steed is Head of the Virtual Environments and Computer Graphics group in the Department of Computer Science at University College London, with 30 years of experience in developing effective immersive experiences. While his early work focused on the engineering of displays and software, he currently focuses on user engagement in collaborative and telepresence scenarios. He received the IEEE VGTC’s 2016 Virtual Reality Technical Achievement Award. Dr. Steed was a Visiting Researcher at Microsoft Research and an Erskine Fellow at the Human Interface Technology Laboratory in New Zealand.

Hasti Seifi, Arizona State University

Hasti.Seifi@asu.edu

Dr. Seifi is an assistant professor in the School of Computing and Augmented Intelligence at Arizona State University. Previously, she was at the University of Copenhagen and the Max Planck Institute for Intelligent Systems. Dr. Seifi aims to democratize access to emerging technologies such as haptics, VR/AR, and robotics. She has helped create open-source datasets, interactive visualizations, and educational content for VR, haptics, and physical HRI, such as LocomotionVault, Haptipedia, VibViz, LearnHaptics, and RobotHands.

Yuhang Zhao, University of Wisconsin-Madison

yuhang.zhao@cs.wisc.edu

Dr. Zhao is an assistant professor in the Department of Computer Sciences at the University of Wisconsin-Madison. She received her Ph.D. degree from Cornell University. Her research interests include Human-Computer Interaction, accessibility, augmented and virtual reality, and mobile interaction. She designs systems and interaction techniques to empower people with diverse abilities in both real-life and virtual worlds.

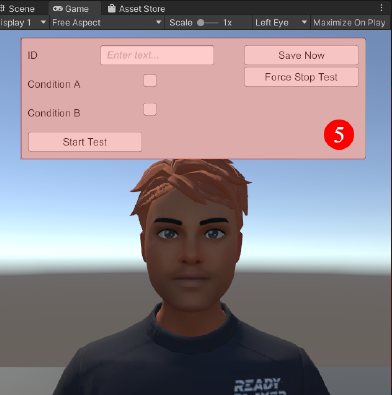

Inceptor: An Open Source Tool for Automated Creation of 3D Social Scenarios

Dan Pollak, School of Computer Science, RUNI

Jonathan Giron, School of Computer Science, RUNI

Doron Friedman, School of Computer Science, RUNI

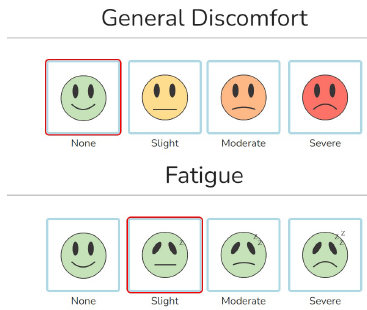

Cybersickness Assessment Framework (CSAF): An Open Source Repository for Standardized Cybersickness Experiments

Adriano Viegas Milani, Ecole Polytechnique Federale de Lausanne

Nana Tian, Ecole Polytechnique Federale de Lausanne

Visualsickness: A web application to record and organize cybersickness data

Elliot Topper, The College of New Jersey

Paula Arroyave, The College of New Jersey

Sharif Mohammad Shahnewaz Ferdous, The College of New Jersey

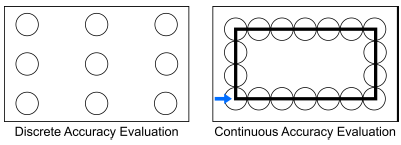

Streamlining Physiological Observations in Immersive Virtual Reality Studies with the Virtual Reality Scientific Toolkit

Jonas Deuchler, Hochschule Karlsruhe, University of Hohenheim

Wladimir Hettmann, Hochschule Karlsruhe, University of Hohenheim

Daniel Hepperle, Hochschule Karlsruhe, University of Hohenheim

Matthias Wolfel, Hochschule Karlsruhe, University of Hohenheim

Semi-Automatic Construction of Virtual Reality Environment for Highway Work Zone Training using Open-Source Tools

Can Li, University of Missouri

Zhu Qing, University of Missouri

Praveen Edara, University of Missouri

Carlos Sun, University of Missouri

Bimal Balakrishnan, Mississippi State University

Yi Shang, University of Missouri

A Preliminary Interview: Understanding XR Developers’ Needs towards Open-Source Accessibility Support

Tiger F. Ji, University of Wisconsin-Madison

Yaxin Hu, School of Computer Science, RUNI

Yu Huang, Vanderbilt University

Ruofei Du, Google Labs

Yuhang Zhao, University of Wisconsin-Madison

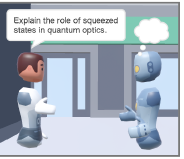

Ubiq-Genie: Leveraging External Frameworks for Enhanced Social VR Experiences

Nels Numan, University College London

Daniele Giunchi, University College London

Benjamin Congdon, University College London

Anthony Steed, University College London

Virtual-to-Physical Surface Alignment and Refinement Techniques for Handwriting, Sketching, and Selection in XR

Florian Kern, HCI Group, University of Wurzburg

Jonathan Tschanter, HCI Group, University of Wurzburg

Marc Erich Latoschik, HCI Group, University of Wurzburg
